Note: this article is inspired by a tweet from Sara Robinson and a short 5-minute video explanation uploaded by Google Cloud Platform on YouTube. This article explains the tools and their usefulness in detail.

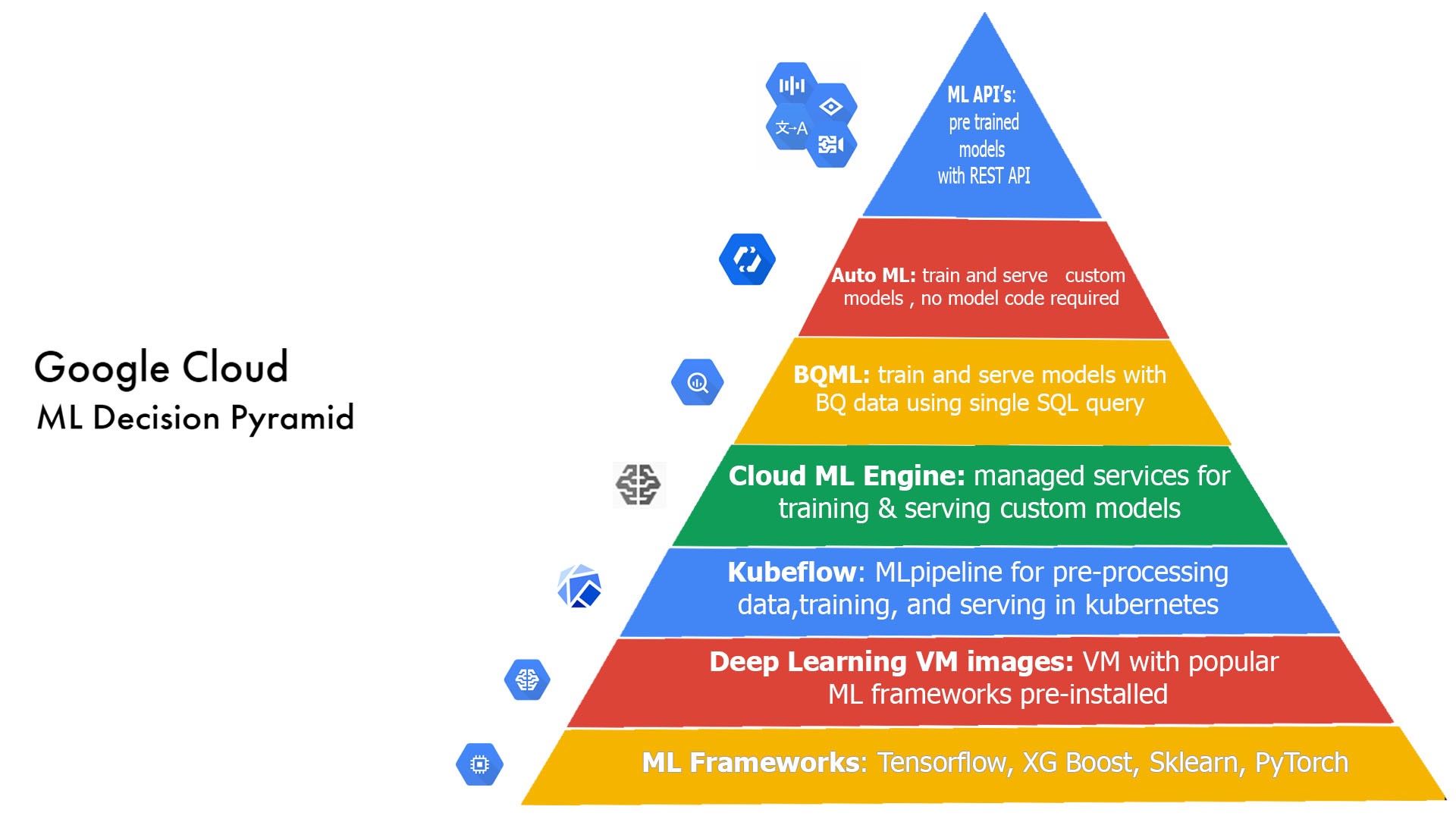

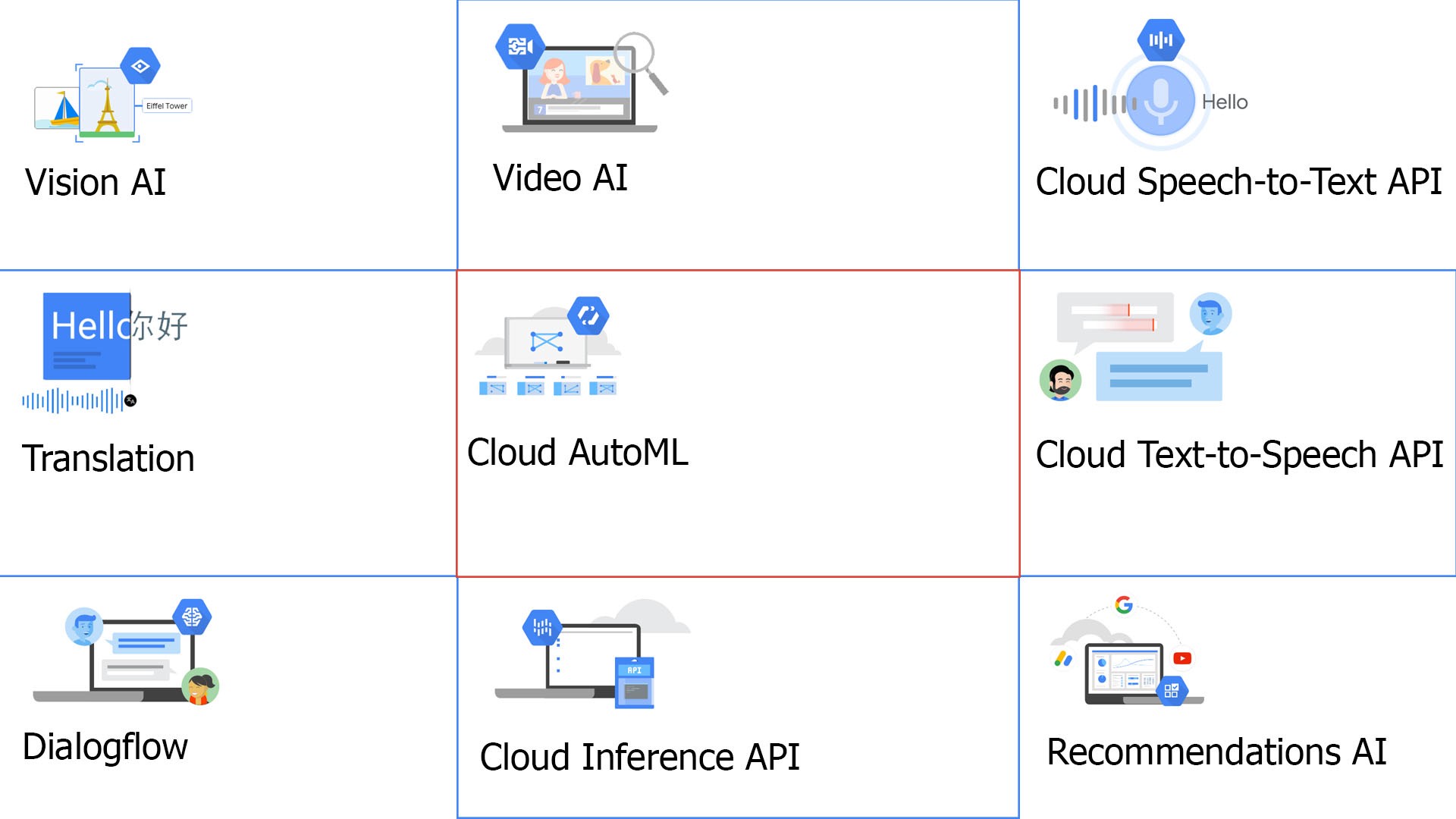

As more development and research is done in the ML/AI space, more people want to take advantage of it. And because people from many different backgrounds are interested in this field, ML service providers will no doubt keep building simpler yet “sophisticated” tools that appeal to a wide range of users. If we take a step back, we can also ask: if machine learning is becoming simpler day by day, companies, organizations, and individuals may stop relying on Data Scientists to build ML models, and the “hype” this field has created could burst. This is just speculation, and even if it turns out to be true, we are still very far from relying completely on a computer for ML tasks… or are we?

For any clarifications, comments, help, appreciation, or suggestions, just post them in the comments and I will help you. If you liked this article, you can follow me on Medium.

Also, you can connect with me on social media if you want more content on Data Science, Artificial Intelligence, or Data Pipelines.

And coffee's on me ☕.